
Spatial and Gray-Level Resolution:

Sampling is the principal factor determining the spatial resolution of an image. Basically, spatial resolution is the smallest discernible detail in an image. Suppose that we construct a chart with vertical lines of width W, with the space between the lines also having width W. A line pair consists of one such line and its adjacent space. Thus, the width of a line pair is 2W, and there are 1/(2W) line pairs per unit distance. A widely used definition of resolution is simply the largest number of discernible line pairs per unit distance; for example, 100 line pairs per millimeter.
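As a small illustration of the line-pair arithmetic above, the following Python sketch evaluates 1/(2W) for a given line width. The function name `line_pairs_per_unit` and the sample width of 0.005 mm are illustrative assumptions, not part of the original text.

```python
def line_pairs_per_unit(line_width):
    """Return line pairs per unit distance for lines and gaps of equal width."""
    if line_width <= 0:
        raise ValueError("line_width must be positive")
    # One line pair spans a line plus its adjacent space: 2 * W.
    return 1.0 / (2.0 * line_width)


# Assumed example: lines 0.005 mm wide, so each line pair is 0.01 mm wide.
density = line_pairs_per_unit(0.005)
print(f"{density:.0f} line pairs per millimeter")
```

With the assumed width, the chart carries 100 line pairs per millimeter, matching the example figure quoted in the text.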

Gray-level resolution similarly refers to the smallest discernible change in gray level. We have considerable discretion regarding the number of samples used to generate a digital image, but this is not true for the number of gray levels. Due to hardware considerations, the number of gray levels is usually an integer power of 2.

The most common choice is 8 bits (256 gray levels), with 16 bits being used in some applications where enhancement of specific gray-level ranges is necessary. Sometimes we find systems that can digitize the gray levels of an image with 10 or 12 bits of accuracy, but these are the exception rather than the rule. When an actual measure of physical resolution relating pixels and the level of detail they resolve in the original scene is not necessary, it is not uncommon to refer to an L-level digital image of size M × N as having a spatial resolution of M × N pixels and a gray-level resolution of L levels.
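The relationship between bit depth and the number of gray levels can be sketched in a few lines of Python. This is only a minimal illustration; the helper names `gray_levels` and `describe` are hypothetical, and the sketch assumes L = 2^k for a k-bit image, as the text states.

```python
def gray_levels(k):
    """Number of gray levels representable with k bits per pixel."""
    if k < 1:
        raise ValueError("k must be at least 1")
    return 2 ** k


def describe(m, n, k):
    """Informal resolution summary for an m x n image with k bits per pixel."""
    return f"{m} x {n} pixels, {gray_levels(k)} gray levels"


for k in (8, 10, 12, 16):
    print(k, "bits:", gray_levels(k), "levels")
print(describe(1024, 1024, 8))
```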

Fig. 7.1. A 1024 × 1024, 8-bit image subsampled down to size 32 × 32 pixels. The number of allowable gray levels was kept at 256.

The subsampling was accomplished by deleting the appropriate number of rows and columns from the original image. For example, the 512 × 512 image was obtained by deleting every other row and column from the 1024 × 1024 image. The 256 × 256 image was generated by deleting every other row and column in the 512 × 512 image, and so on. The number of allowed gray levels was kept at 256. These images show the dimensional proportions between various sampling densities, but their size differences make it difficult to see the effects resulting from a reduction in the number of samples. The simplest way to compare these effects is to bring all the subsampled images up to size 1024 × 1024 by row and column pixel replication. The results are shown in Figs. 7.2(b) through (f). Figure 7.2(a) is the same 1024 × 1024, 256-level image shown in Fig. 7.1; it is repeated to facilitate comparisons.
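The subsampling and pixel-replication steps described above can be sketched with NumPy as follows. This is a minimal sketch under stated assumptions: the function names `subsample` and `replicate` are hypothetical, a random array stands in for the actual figure, and "deleting every other row and column" is taken to mean keeping rows and columns 0, 2, 4, and so on.

```python
import numpy as np


def subsample(image, factor=2):
    """Keep every `factor`-th row and column, discarding the rest."""
    return image[::factor, ::factor]


def replicate(image, factor=2):
    """Enlarge by repeating each pixel `factor` times along rows and columns."""
    return np.repeat(np.repeat(image, factor, axis=0), factor, axis=1)


# Synthetic 1024 x 1024, 8-bit image (an assumed stand-in for the figure).
rng = np.random.default_rng(0)
original = rng.integers(0, 256, size=(1024, 1024), dtype=np.uint8)

# Halve repeatedly: 1024 -> 512 -> 256 -> 128 -> 64 -> 32.
small = original
for _ in range(5):
    small = subsample(small)

# Bring the 32 x 32 image back up to 1024 x 1024 by pixel replication.
restored = replicate(small, original.shape[0] // small.shape[0])
print(small.shape, restored.shape)
```

Replication restores the pixel count but not the discarded detail: each 32 × 32 block of `restored` holds a single repeated value, which is what produces the checkerboard appearance at low sampling densities.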

Fig. 7.2. (a) 1024 × 1024, 8-bit image. (b) 512 × 512 image resampled into 1024 × 1024 pixels by row and column duplication. (c) through (f) 256 × 256, 128 × 128, 64 × 64, and 32 × 32 images resampled into 1024 × 1024 pixels.

Compare Fig. 7.2(a) with the 512 × 512 image in Fig. 7.2(b) and note that it is virtually impossible to tell these two images apart. The level of detail lost is simply too fine to be seen on the printed page at the scale in which these images are shown. Next, the 256 × 256 image in Fig. 7.2(c) shows a very slight fine checkerboard pattern in the borders between flower petals and the black background. A slightly more pronounced graininess throughout the image also is beginning to appear. These effects are much more visible in the 128 × 128 image in Fig. 7.2(d), and they become pronounced in the 64 × 64 and 32 × 32 images in Figs. 7.2(e) and (f), respectively.

In the next example, we keep the number of samples constant and reduce the number of gray levels from 256 to 2, in integer powers of 2. Figure 7.3(a) is a 452 × 374 CAT projection image, displayed with k = 8 (256 gray levels). Images such as this are obtained by fixing the X-ray source in one position, thus producing a 2-D image in any desired direction. Projection images are used as guides to set up the parameters for a CAT scanner, including tilt, number of slices, and range. Figures 7.3(b) through (h) were obtained by reducing the number of bits from k = 7 to k = 1 while keeping the spatial resolution constant at 452 × 374 pixels. The 256-, 128-, and 64-level images are visually identical for all practical purposes. The 32-level image shown in Fig. 7.3(d), however, has an almost imperceptible set of very fine ridgelike structures in areas of smooth gray levels (particularly in the skull). This effect, caused by the use of an insufficient number of gray levels in smooth areas of a digital image, is called false contouring, so called because the ridges resemble topographic contours in a map. False contouring generally is quite visible in images displayed using 16 or fewer uniformly spaced gray levels, as the images in Figs. 7.3(e) through (h) show.
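The gray-level reduction described above can be approximated with a simple uniform requantization. The sketch below is one possible way to do it, not necessarily how the figures were produced: it assumes an 8-bit input, drops the low-order bits to leave 2^k codes, and then stretches those codes back to the 0 to 255 range for display. The function name `reduce_gray_levels` and the synthetic ramp image are illustrative assumptions.

```python
import numpy as np


def reduce_gray_levels(image, k):
    """Requantize an 8-bit image to 2**k uniformly spaced gray levels."""
    if not 1 <= k <= 8:
        raise ValueError("k must be between 1 and 8")
    levels = 2 ** k
    # Dropping the (8 - k) low-order bits leaves codes in 0 .. levels - 1.
    codes = image >> (8 - k)
    # Stretch the codes back to the full 0 .. 255 display range.
    stretched = codes.astype(np.float64) * 255.0 / (levels - 1)
    return stretched.round().astype(np.uint8)


# A smooth horizontal ramp: the kind of region where false contours appear.
ramp = np.tile(np.arange(256, dtype=np.uint8), (4, 1))

for k in range(8, 0, -1):
    quantized = reduce_gray_levels(ramp, k)
    print(f"k={k}: {len(np.unique(quantized))} distinct gray levels")
```

On the ramp, each reduction in k merges neighboring gray values into wider flat bands, and the steps between bands are what the eye reads as false contours in smooth regions.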

Fig. 7.3. (a) 452 × 374, 256-level image. (b)–(d) Image displayed in 128, 64, and 32 gray levels, while keeping the spatial resolution constant. (e)–(h) Image displayed in 16, 8, 4, and 2 gray levels.

As a very rough rule of thumb, and assuming powers of 2 for convenience, images of size 256 × 256 pixels and 64 gray levels are about the smallest images that can be expected to be reasonably free of objectionable sampling checkerboards and false contouring.

The results in Examples 7.2 and 7.3 illustrate the effects produced on image quality by varying N (the spatial resolution) and k (the number of bits per pixel) independently. However, these results only partially answer the question of how varying N and k affects images, because we have not yet considered any relationships that might exist between these two parameters.

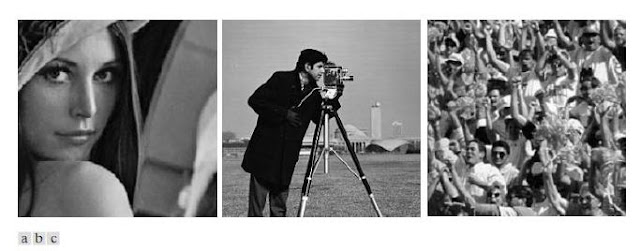

An early study by Huang [1965] attempted to quantify experimentally the effects on image quality produced by varying N and k simultaneously. The experiment consisted of a set of subjective tests. Images similar to those shown in Fig. 7.4 were used. The woman’s face is representative of an image with relatively little detail; the picture of the cameraman contains an intermediate amount of detail; and the crowd picture contains, by comparison, a large amount of detail. Sets of these three types of images were generated by varying N and k, and observers were then asked to rank them according to their subjective quality. Results were summarized in the form of so-called isopreference curves in the Nk-plane (Fig. 7.5 shows average isopreference curves representative of curves corresponding to the images shown in Fig. 7.4). Each point in the Nk-plane represents an image having values of N and k equal to the coordinates of that point.

Fig. 7.4. (a) Image with a low level of detail. (b) Image with a medium level of detail. (c) Image with a relatively large amount of detail.

Points lying on an isopreference curve correspond to images of equal subjective quality. It was found in the course of the experiments that the isopreference curves tended to shift right and upward, but their shapes in each of the three image categories were similar to those shown in Fig. 7.5. This is not unexpected, since a shift up and to the right in the curves simply means larger values for N and k, which implies better picture quality.

Fig. 7.5. Representative isopreference curves for the three types of images in Fig. 7.4.

The key point of interest in the context of the present discussion is that isopreference curves tend to become more vertical as the detail in the image increases. This result suggests that for images with a large amount of detail only a few gray levels may be needed. For example, the isopreference curve in Fig. 7.5 corresponding to the crowd is nearly vertical. This indicates that, for a fixed value of N, the perceived quality for this type of image is nearly independent of the number of gray levels used. It is also of interest to note that perceived quality in the other two image categories remained the same in some intervals in which the spatial resolution was increased, but the number of gray levels actually decreased. The most likely reason for this result is that a decrease in k tends to increase the apparent contrast of an image, a visual effect that humans often perceive as improved quality in an image.
